SSD Review Background, Setup, and Methodology v1.0

How I intend to perform my tests on my first batch of SSDs.

Dec 22, 2020

- Benjamin Wachman

Tags:

#Methodology-v1

#Testbed-v1

#SSD

Over the next few weeks and months, my goal is to publish several SSD reviews. A collection of benchmarks is, by itself, not super meaningful unless you know what the rest of the test environment looks like and how the testing was performed.

Initially, I was looking to buy one or more niche M.2 SSDs for a project using an Asrock Z97E-ITX/ac motherboard. That board only accepts M.2 drives in the 2230 or 2242 form factors, not the more common 2280 size. I quickly discovered that there were no meaningful comparisons or reviews online, because most of the drives are OEM parts that aren’t sold at retail. So I decided to buy several of the drives in that segment and test them myself. With all that work done, it didn’t seem too far out of my way to publish the results. Now, after several months of alternating procrastination, learning, and progress, here we are.

Though my initial intention was to find which drive(s) would perform best as an OS/boot drive, I’m now going to have a nice handful of extra drives, so I’m also interested in finding which drive(s) would be best suited to large file transfers in an external enclosure.

Without further ado, let’s dive into the details of the testbed I’ll be using for my initial round of SSD tests…

Testbed

| Testbed v1.0 | |

|---|---|

| CPU | Xeon E3 1285 v4 (SR2CX) |

| Cooler | Intel Stock with copper core |

| Motherboard | Asrock Z97E-ITX/ac |

| RAM | 1x8GB Corsair DDR3 1866 CL9 |

| GPU | Iris Pro P6300 |

| Boot Drive | SK Hynix BC501A @ PCIe 2.0 x2 |

| PSU | Dell 305W 80 Plus Bronze |

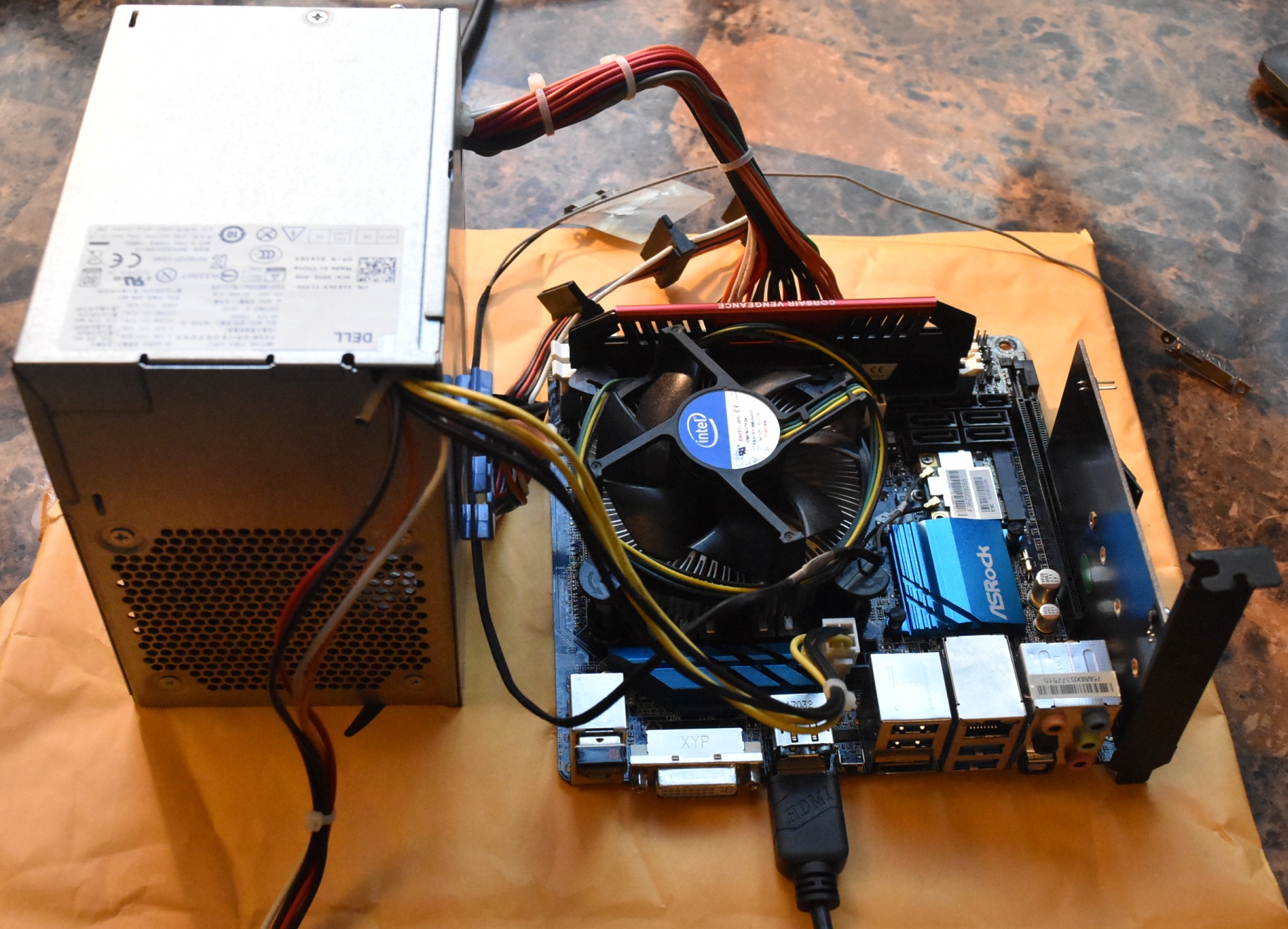

| Case | Open Air |

| OS | Windows 10 x64 Build 2004 |

| Misc | Rivio PCIe x4 M.2 Riser Card |

In addition to only accepting the smaller 2230 or 2242 M.2 drives, the Asrock Z97E-ITX/ac also limits its M.2 slot to PCIe 2.0 x2, meaning that at best the drives would top out at 1GB/s. Results from behind that bottleneck would make for pretty meaningless comparisons, so I planned to use an Asrock Fatal1ty X370 Gaming X that I had picked up super cheap for a different project. The Fatal1ty had two M.2 slots, including one wired directly to the CPU’s PCIe lanes at PCIe 3.0 x4. For those of you who don’t have the bandwidth of each PCIe generation memorized: PCIe 3.0 is good for 985MB/s per lane in each direction (after 128b/130b encoding, before protocol overhead), so a 3.0 x4 link has a theoretical maximum throughput of 3940MB/s, and a 3.0 x2 link has exactly half that. PCIe 2.0 uses the less efficient 8b/10b encoding and manages only 500MB/s per lane, which is where the Z97E’s 1GB/s ceiling comes from.
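To make the lane arithmetic concrete, here is a minimal Python sketch that derives link bandwidth from each generation’s signalling rate and line encoding. The `PCIE_GENERATIONS` table and `link_bandwidth_mb_s` function are illustrative names of my own, not part of any tool used in testing, and the numbers only account for encoding overhead, so real-world throughput will be lower still.

```python
# Signalling rate (GT/s) and line-encoding efficiency for each PCIe generation.
PCIE_GENERATIONS = {
    2: (5.0, 8 / 10),      # PCIe 2.0: 8b/10b encoding
    3: (8.0, 128 / 130),   # PCIe 3.0: 128b/130b encoding
    4: (16.0, 128 / 130),  # PCIe 4.0: 128b/130b encoding
}


def link_bandwidth_mb_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in MB/s (10^6 bytes per second).

    Only line-encoding overhead is included; packet and protocol overhead
    (TLP headers, flow control, etc.) reduces real throughput further.
    """
    gt_per_s, efficiency = PCIE_GENERATIONS[gen]
    # One bit per transfer per lane; divide by 8 to convert bits to bytes.
    per_lane = gt_per_s * 1000 * efficiency / 8
    return per_lane * lanes


for gen, lanes in [(2, 2), (3, 2), (3, 4), (4, 4)]:
    print(f"PCIe {gen}.0 x{lanes}: {link_bandwidth_mb_s(gen, lanes):.0f} MB/s")
```

For PCIe 3.0 x4 this works out to about 3938MB/s; the 3940MB/s figure comes from rounding to 985MB/s per lane first. PCIe 2.0 x2 lands at 1000MB/s, the Z97E’s M.2 ceiling.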

So I dropped in my spare Ryzen 3 1200, 2x16GB of DDR4-2666, a Radeon HD 8570 graphics card, and a SATA SSD boot drive, and began testing. Unfortunately, about halfway through my first round of testing I ran into problems: no matter what I tried, I couldn’t get the board to POST with two of the drives installed. I tried them in both of the M.2 slots, and in an M.2 riser card in various PCIe slots. No dice. So I had to change gears and use the Z97E-ITX/ac after all, but with the riser card. The riser card has no logic on it and won’t affect performance; it is just a form-factor adapter that lets you use a PCIe M.2 drive in a traditional PCIe slot.

As it turns out, the Z97E has a BIOS bug that prevents it from booting from NVMe M.2 drives, whether they’re in the M.2 slot or the x16 slot. I found the resources to mod and reflash the BIOS (yeah, I know, I know, it’s UEFI) to fix compatibility, but there’s definitely some risk involved if you’ve never done it before. If you trust the community, pre-modded BIOS images are also available. Thankfully I didn’t run into any other compatibility issues and was able to complete all of my testing on that setup.

Performance results between the X370 testbed and the Z97 testbed were pretty close, but all reported test data is from the Z97 platform, dubbed “Testbed v1.0”. Currently the Testbed is limited to PCIe 3.0. In the future, I intend to migrate to a newer platform with PCIe 4.0 to better test new drives, but first I plan to gather a couple more sets of data.

The Tests

I plan to evolve these tests over time to include more real-world workloads, but for now I’m relying fairly heavily on synthetic benchmarks and timed file-transfer tests.

| Tool | Purpose/Test | Protocol | Notes |
|---|---|---|---|
| AIDA64 Linear Write | Sequential and Random Reads and Writes | Run once, after erase, TRIM, and an idle period for garbage collection | |
| AS-SSD | Sequential and Random Reads and Writes | Run 5x and average all runs | |
| PCMark 8 Storage | Supposedly "real world" disk access traces | Run once (internally loops 3x) | Doesn’t show much differentiation between drives |
| Large-file transfer | Transfer a VMware VM of 29.7GB / 250 files | Run once | Read speed is limited to ~750MB/s by the source drive’s PCIe 2.0 x2 interface |
| Small-file transfer | Transfer a Steam Library of 7.4GB / 21k files | Run once | Read speed is limited to ~750MB/s by the source drive’s PCIe 2.0 x2 interface |
| Large-file on-disk copy | Copy a VMware VM of 29.7GB / 250 files | Run 4x and average all runs | |
| Small-file on-disk copy | Copy a Steam Library of 7.4GB / 21k files | Run 4x and average all runs | |
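The transfer and copy tests boil down to dividing the bytes moved by the elapsed time. How the timing was actually done isn’t described here, so the following is only a hypothetical Python sketch of one way to automate it; `tree_size_bytes`, `timed_copy`, and `repeated_copies` are illustrative names. One caveat: `shutil.copytree` can return before the operating system has flushed its write cache to the drive, so small copies may look faster than the drive really is, which is one argument for large test sets like the 29.7GB VM.

```python
import os
import shutil
import time
from pathlib import Path


def tree_size_bytes(root: Path) -> tuple[int, int]:
    """Return (total bytes, file count) for every file under root."""
    total, count = 0, 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            total += os.path.getsize(os.path.join(dirpath, name))
            count += 1
    return total, count


def timed_copy(src: Path, dst: Path) -> dict:
    """Copy the tree at src to dst (which must not exist yet) and time it."""
    size, files = tree_size_bytes(src)
    start = time.perf_counter()
    shutil.copytree(src, dst)
    # Guard against a zero reading on a trivially small copy.
    elapsed = max(time.perf_counter() - start, 1e-9)
    return {
        "bytes": size,
        "files": files,
        "seconds": elapsed,
        "mb_per_s": size / 1e6 / elapsed,  # decimal MB, as drive vendors use
    }


def repeated_copies(src: Path, scratch: Path, runs: int = 4) -> list[float]:
    """Run timed_copy `runs` times into scratch, deleting each copy afterwards."""
    speeds = []
    for i in range(runs):
        dst = scratch / f"copy_{i}"
        speeds.append(timed_copy(src, dst)["mb_per_s"])
        shutil.rmtree(dst)  # free the space again before the next run
    return speeds
```

For an on-disk copy test, both `src` and `scratch` would live on the drive under test, and averaging the list returned by `repeated_copies` mirrors the "Run 4x and average all runs" protocol.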

The Large-file Transfer and Copy tests exist mainly because of my own personal experience. At work, where I do a lot of virtualization, I was super excited when I was able to buy a 2TB Samsung 960 PRO (for an eye-watering $1400, IIRC) back when it was new. I was looking forward to cloning my VMs much more quickly than with my previous SATA SSD, since its peak read and write performance was so high. But I was supremely disappointed when copy speeds on large sequential operations would often only reach about 650MB/s. Hence these tests.

I’m not sure why I decided that a single iteration of the Source-to-Target transfer tests would provide data I could be confident in. I measured it because, why not? I had to copy the data over to the target drive to perform the Copy tests anyway. I figured that since the source drive already capped read speeds at ~750MB/s, making this a flawed test that would produce low-quality data regardless, it wasn’t worth putting much time into. This was also before I’d decided to add error bars to the tests with multiple runs, so I didn’t see the value in repeating it, but the absence of error bars on these results is a bit conspicuous. I’ll remedy this in future tests, and will of course need to re-run everything once I have a proper testbed where the Source drive in a file-copy operation isn’t clearly a bottleneck.
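For the tests that are run multiple times, an error bar only needs the spread across runs. Below is a minimal Python sketch using the standard-library `statistics` module; `summarize_runs` is a hypothetical helper, and whether the bar shows the standard deviation or the standard error of the mean is a presentation choice I’m leaving open here.

```python
import statistics
from math import sqrt


def summarize_runs(values: list[float]) -> tuple[float, float, float]:
    """Return (mean, sample standard deviation, standard error of the mean).

    At least two runs are required: a single run (like the one-off transfer
    tests) has no spread, so there is nothing to draw an error bar from.
    """
    if len(values) < 2:
        raise ValueError("need at least two runs to estimate spread")
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    return mean, sd, sd / sqrt(len(values))
```

Feeding it the four results of an on-disk copy test gives the bar’s center (the mean) and a half-width (either `sd` or the standard error); feeding it a single transfer result raises an error, which is the same gap the one-off transfer tests have.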

As with Testbed v1.0, I intend to gather some more data with the existing methodology to have a larger set of drives to compare against before revising The Tests v1.0.

If you’ve made it this far, then you’re definitely ready to read my first actual review: Compact M.2 SSD Roundup — Short But Sweet?.